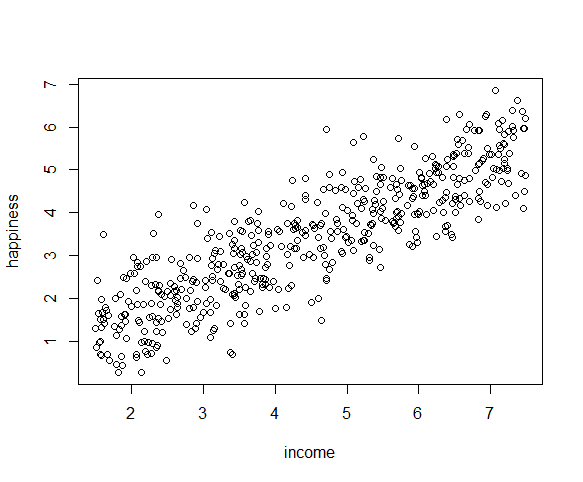

Linear Regression in R | A Step-by-Step Guide and Examples

Published on February 25, 2020 by Rebecca Bevans. Revised on June 22, 2023.

Linear regression is a regression model that uses a straight line to describe the relationship between variables. It finds the line of best fit through your data by searching for the value of the regression coefficient(s) that minimizes the total error of the model.

There are two main types of linear regression:

- Simple linear regression uses only one independent variable

- Multiple linear regression uses two or more independent variables

In this step-by-step guide, we will walk you through linear regression in R using two sample datasets.

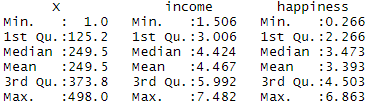

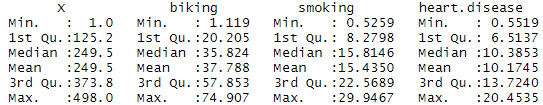

Because both our variables are quantitative, when we run this function we see a table in our console with a numeric summary of the data. This tells us the minimum, median, mean, and maximum values of the independent variable (income) and dependent variable (happiness):
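As a minimal sketch of this step, the code below builds a simulated stand-in for the income dataset and prints its numeric summary with summary(). The simulated values (sample size, ranges, slope, and noise) are assumptions for illustration only; with the real data you would load the file first, for example with read.csv().

```r
# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)

# Numeric summary of each column: minimum, quartiles, median, mean, maximum
income.summary <- summary(income.data)
print(income.summary)
```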

Multiple regression

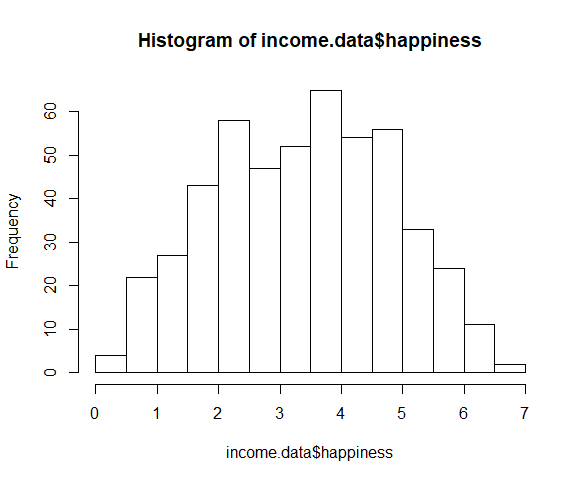

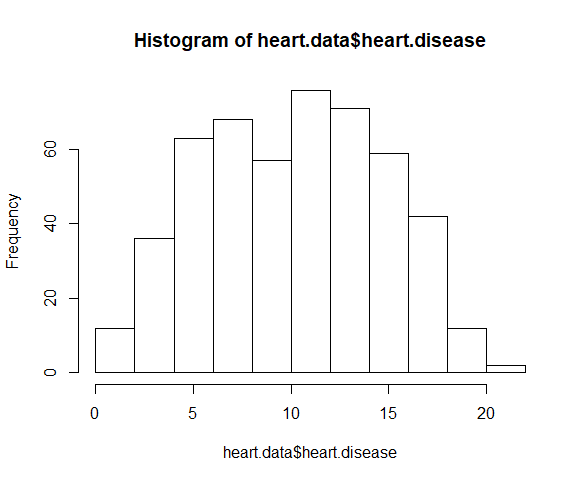

The observations are roughly bell-shaped (more observations in the middle of the distribution, fewer on the tails), so we can proceed with the linear regression.

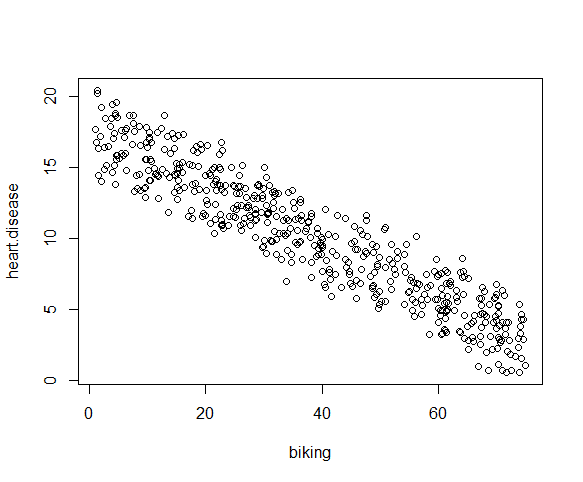

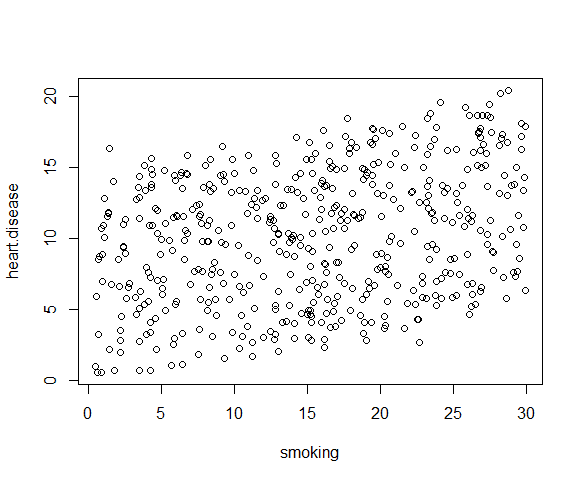

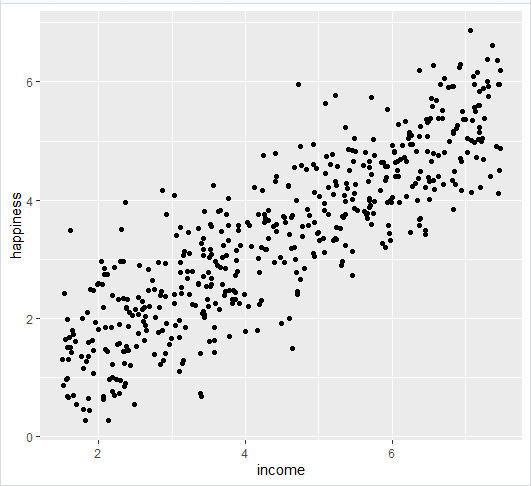

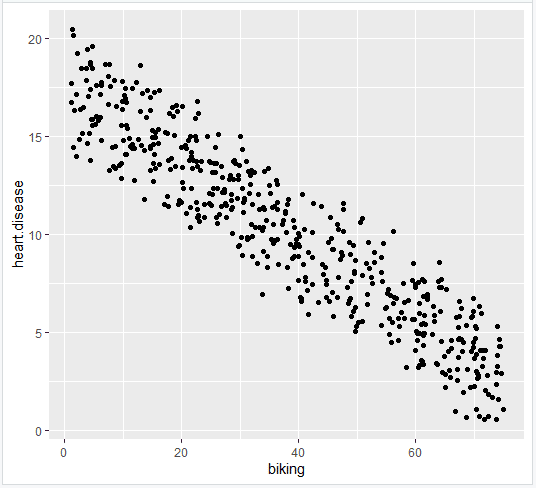

The relationship between the independent and dependent variable must be linear. We can test this visually with a scatter plot to see if the distribution of data points could be described with a straight line.
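A quick way to make this visual check is a base R scatter plot. The sketch below uses simulated data as a stand-in for the income dataset, so the numbers are assumptions rather than the article's values.

```r
# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)

# Scatter plot of the dependent variable against the independent variable;
# a roughly straight band of points suggests a linear relationship
plot(happiness ~ income, data = income.data)
```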

When we run this code, the output is 0.015. The correlation between biking and smoking is very weak (a correlation coefficient of 0.015 is close to zero), so we can include both variables in our model.
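A check like this can be done with cor(). The sketch below simulates two independent predictors as a stand-in for the heart disease dataset, so the resulting coefficient will differ from the 0.015 reported above; the column names and ranges are assumptions.

```r
# Simulated stand-in for the heart disease dataset (an assumption)
set.seed(42)
heart.data <- data.frame(
  biking  = runif(500, min = 1, max = 75),
  smoking = runif(500, min = 0.5, max = 30)
)

# Pearson correlation between the two independent variables;
# values near zero suggest the predictors are not strongly related
biking.smoking.cor <- cor(heart.data$biking, heart.data$smoking)
print(biking.smoking.cor)
```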

Use the hist() function to test whether your dependent variable follows a normal distribution.
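For example, assuming the dependent variable is a column called happiness in a data frame called income.data (simulated here as a stand-in), the call might look like this:

```r
# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)

# Histogram of the dependent variable; hist() also returns the bin counts
happiness.hist <- hist(income.data$happiness)
```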

The output looks like this:

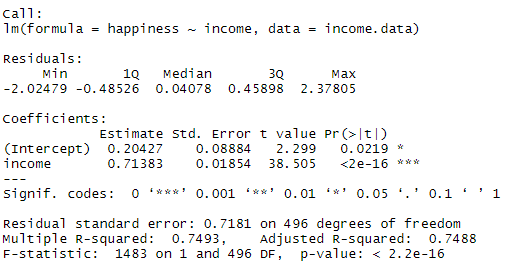

This output table first presents the model equation, then summarizes the model residuals (see step 4).

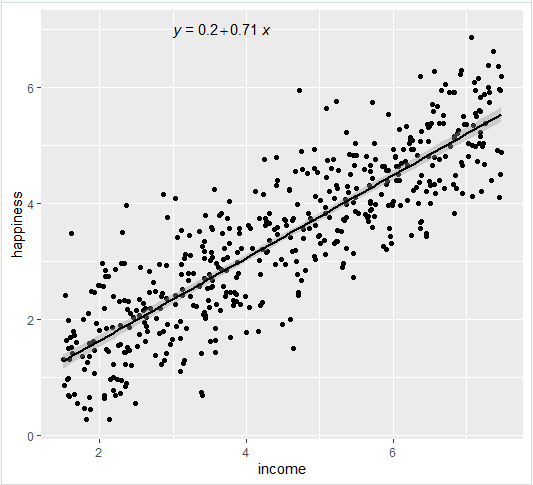

The Coefficients section shows:

- The estimates (Estimate) for the model parameters – the value of the y-intercept (in this case 0.204) and the estimated effect of income on happiness (0.713).

- The standard error of the estimated values (Std. Error).

- The test statistic (t value, in this case the t statistic).

- The p value (Pr(>|t|)), i.e. the probability of finding a t statistic at least this extreme if the null hypothesis of no relationship were true.

The final three lines are model diagnostics. The most important thing to note is the p value of the overall model (here it is 2.2e-16, or almost zero), which indicates whether the model explains significantly more variation than a model with no predictors.

From these results, we can say that there is a significant positive relationship between income and happiness (p value < 0.001), with a 0.713-unit (+/- 0.01) increase in happiness for every unit increase in income.
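The sketch below shows how a simple regression like this can be fitted and summarized with lm() and summary(). It runs on simulated stand-in data, so the estimates will not match the 0.204 and 0.713 quoted above; the object names are assumptions.

```r
# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)

# Fit happiness as a linear function of income and print the summary table
income.happiness.lm <- lm(happiness ~ income, data = income.data)
model.summary <- summary(income.happiness.lm)
print(model.summary)

# The Coefficients section is also available as a matrix
coef.table <- coef(model.summary)
slope    <- coef.table["income", "Estimate"]
slope.se <- coef.table["income", "Std. Error"]
slope.p  <- coef.table["income", "Pr(>|t|)"]
```

Pulling the estimate, standard error, and p value out of the coefficient matrix makes it easier to quote them accurately when reporting results.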

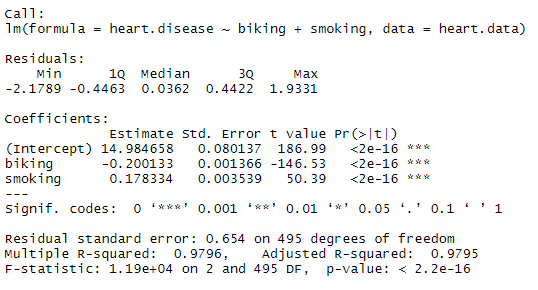

Multiple regression: biking, smoking, and heart disease

Let’s see if there’s a linear relationship between biking to work, smoking, and heart disease in our imaginary survey of 500 towns. The rates of biking to work range between 1 and 75%, rates of smoking between 0.5 and 30%, and rates of heart disease between 0.5% and 20.5%.

To test the relationship, we first fit a linear model with heart disease as the dependent variable and biking and smoking as the independent variables. Run these two lines of code:
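A minimal sketch of such a model fit follows. The data are simulated to roughly match the ranges and effect sizes mentioned in this guide; the intercept, the noise level, and the object names are assumptions.

```r
# Simulated stand-in for the heart disease dataset (an assumption)
set.seed(42)
heart.data <- data.frame(
  biking  = runif(500, min = 1, max = 75),
  smoking = runif(500, min = 0.5, max = 30)
)
heart.data$heart.disease <- 15 - 0.2 * heart.data$biking +
  0.18 * heart.data$smoking + rnorm(500, sd = 0.65)

# Heart disease as the dependent variable, biking and smoking as predictors
heart.disease.lm <- lm(heart.disease ~ biking + smoking, data = heart.data)
print(summary(heart.disease.lm))
```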

Note that the par(mfrow = ...) command divides the Plots window into the number of rows and columns given in c(). So par(mfrow=c(2,2)) divides it up into two rows and two columns. To go back to plotting one graph in the entire window, set the parameter again with par(mfrow=c(1,1)).
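Put together, the diagnostic-plot step might look like the sketch below. It uses a model fitted to simulated stand-in data (an assumption); any object returned by lm() can be passed to plot() in the same way.

```r
# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)
income.happiness.lm <- lm(happiness ~ income, data = income.data)

par(mfrow = c(2, 2))       # split the plotting window into a 2 x 2 grid
plot(income.happiness.lm)  # draw the four standard residual plots
par(mfrow = c(1, 1))       # restore a single plot per window
```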

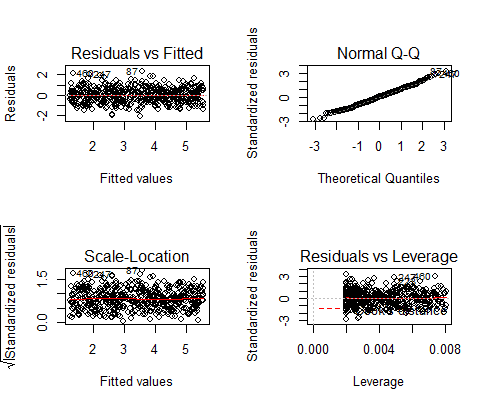

These are the residual plots produced by the code:

Residuals are the differences between the observed values and the values predicted by the model, i.e. the variation the model leaves unexplained. They are not exactly the same as model error, but they estimate it, so seeing a bias in the residuals would also indicate a bias in the error.

The most important thing to look for is that the red lines representing the mean of the residuals are all basically horizontal and centered around zero. This means there are no outliers or biases in the data that would make a linear regression invalid.

In the Normal Q-Q plot in the top right, we can see that the real residuals from our model form an almost perfectly one-to-one line with the theoretical residuals from a perfect model.

Based on these residuals, we can say that our model meets the assumption of homoscedasticity.

Multiple regression

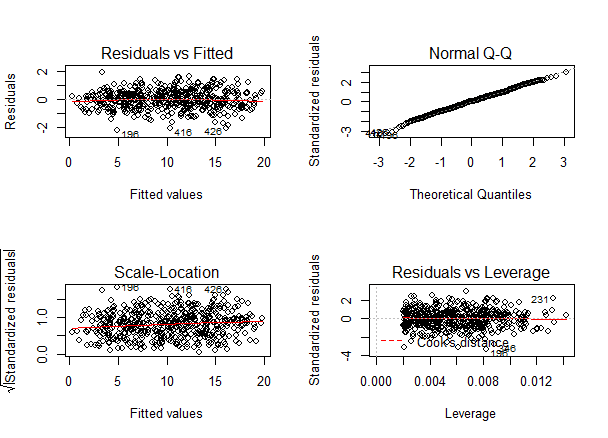

Again, we should check that our model is actually a good fit for the data, and that we don’t have large variation in the model error, by running this code:

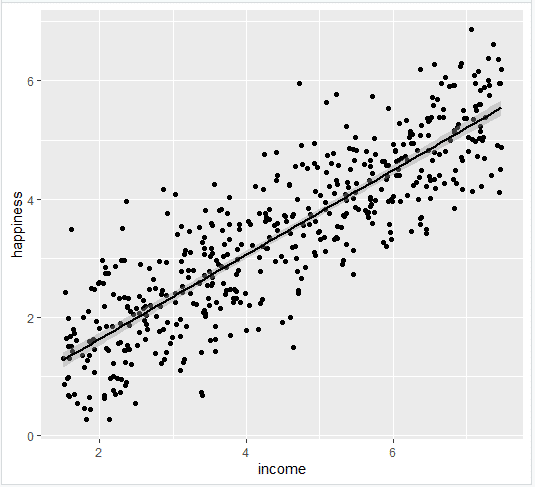

- Add the linear regression line to the plotted data

Add the regression line using geom_smooth() and typing in lm as your method for creating the line. This will add the line of the linear regression as well as the standard error of the estimate (in this case +/- 0.01) as a light grey stripe surrounding the line:

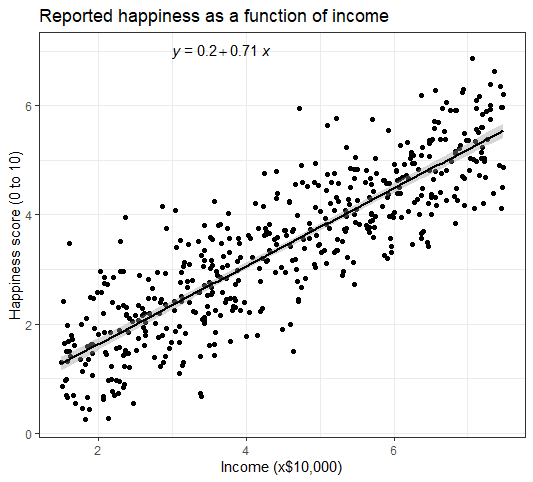

- Make the graph ready for publication

We can add some style parameters using theme_bw() and making custom labels using labs() .
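The plotting steps described here can be sketched as follows, assuming the ggplot2 package is installed. The data are simulated stand-ins, and the title and axis labels are hypothetical placeholders rather than the article's own wording.

```r
library(ggplot2)

# Simulated stand-in for the income dataset (an assumption, not the real data)
set.seed(42)
income.data <- data.frame(income = runif(500, min = 1.5, max = 7.5))
income.data$happiness <- 0.2 + 0.7 * income.data$income + rnorm(500, sd = 0.7)

income.graph <- ggplot(income.data, aes(x = income, y = happiness)) +
  geom_point() +
  # regression line plus a shaded band for its standard error
  geom_smooth(method = "lm", formula = y ~ x, col = "black") +
  # plain white background and custom labels (label text is hypothetical)
  theme_bw() +
  labs(title = "Happiness as a function of income",
       x = "Income",
       y = "Happiness score")

print(income.graph)
```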

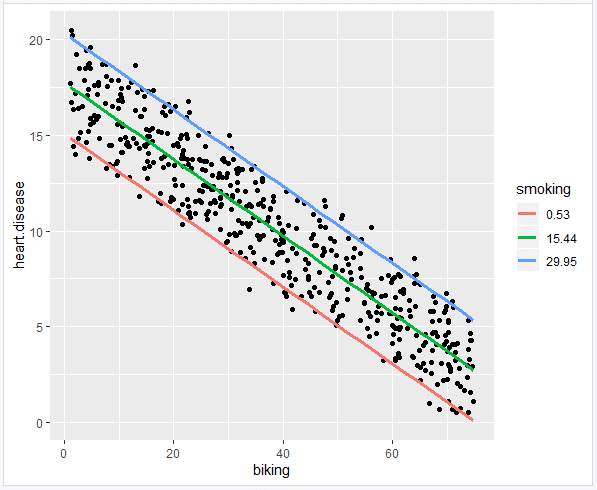

This will not create anything new in your console, but you should see a new data frame appear in the Environment tab. Click on it to view it.

- Predict the values of heart disease based on your linear model

Next we will save our ‘predicted y’ values as a new column in the dataset we just created.
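One way to build such a data frame and add the predictions is with expand.grid() and predict(). The sketch below runs on simulated stand-in data; the choice of three smoking levels (minimum, mean, maximum) and the names plotting.data and predicted.y are assumptions for illustration.

```r
# Simulated stand-in for the heart disease dataset (an assumption)
set.seed(42)
heart.data <- data.frame(
  biking  = runif(500, min = 1, max = 75),
  smoking = runif(500, min = 0.5, max = 30)
)
heart.data$heart.disease <- 15 - 0.2 * heart.data$biking +
  0.18 * heart.data$smoking + rnorm(500, sd = 0.65)
heart.disease.lm <- lm(heart.disease ~ biking + smoking, data = heart.data)

# New data frame: a sequence of biking rates at three levels of smoking
plotting.data <- expand.grid(
  biking  = seq(min(heart.data$biking), max(heart.data$biking), length.out = 30),
  smoking = c(min(heart.data$smoking), mean(heart.data$smoking), max(heart.data$smoking))
)

# Save the model's predicted values as a new column
plotting.data$predicted.y <- predict(heart.disease.lm, newdata = plotting.data)
head(plotting.data)
```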

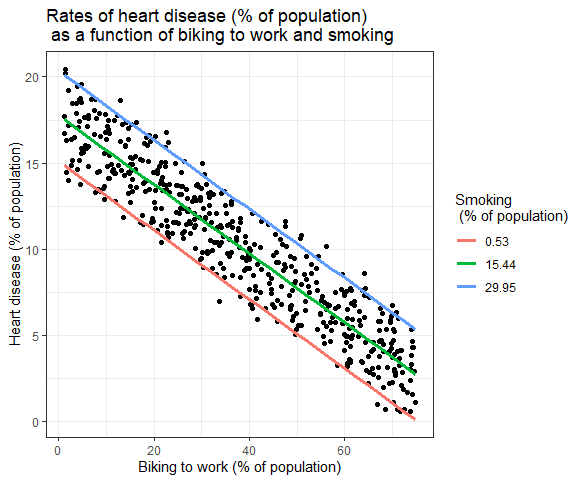

- Make the graph ready for publication

This is the finished graph that you can include in your papers!

Step 6: Report your results

In addition to the graph, include a brief statement explaining the results of the regression model.

Reporting the results of simple linear regression

We found a significant relationship between income and happiness (p < 0.001, R² = 0.73 ± 0.0193), with a 0.713-unit increase in reported happiness for every $10,000 increase in income.

Reporting the results of multiple linear regression

In our survey of 500 towns, we found significant relationships between the frequency of biking to work and the frequency of heart disease, and between the frequency of smoking and the frequency of heart disease (p < 0.001 for both).

Specifically, we found a 0.2% decrease (± 0.0014) in the frequency of heart disease for every 1% increase in biking, and a 0.178% increase (± 0.0035) in the frequency of heart disease for every 1% increase in smoking.

Other interesting articles

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

Statistics

- Chi square test of independence

- Statistical power

- Descriptive statistics

- Degrees of freedom

- Pearson correlation

- Null hypothesis

Methodology

- Double-blind study

- Case-control study

- Research ethics

- Data collection

- Hypothesis testing

- Structured interviews

Research bias

- Hawthorne effect

- Unconscious bias

- Recall bias

- Halo effect

- Self-serving bias

- Information bias

Cite this Scribbr article

Bevans, R. (2023, June 22). Linear Regression in R | A Step-by-Step Guide and Examples. Scribbr. Retrieved November 8, 2023, from https://www.scribbr.com/statistics/linear-regression-in-r/

Rebecca Bevans

Rebecca is working on her PhD in soil ecology and spends her free time writing. She’s very happy to be able to nerd out about statistics with all of you.

ANOVA in R | A Complete Step-by-Step Guide with Examples

Published on March 6, 2020 by Rebecca Bevans. Revised on June 22, 2023.

ANOVA is a statistical test for estimating how a quantitative dependent variable changes according to the levels of one or more categorical independent variables. ANOVA tests whether there is a difference in means of the groups at each level of the independent variable.

The null hypothesis (H0) of the ANOVA is no difference in means, and the alternative hypothesis (Ha) is that the means are different from one another.

In this guide, we will walk you through the process of a one-way ANOVA (one independent variable) and a two-way ANOVA (two independent variables).

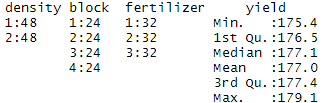

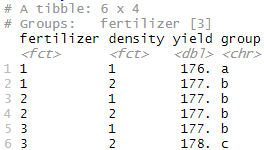

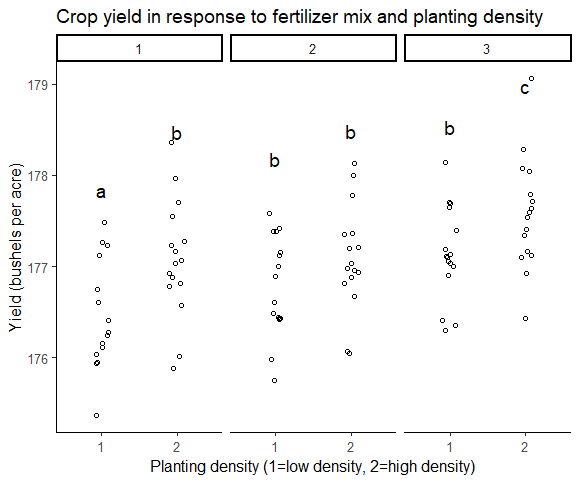

Our sample dataset contains observations from an imaginary study of the effects of fertilizer type and planting density on crop yield.

You should see ‘density’, ‘block’, and ‘fertilizer’ listed as categorical variables with the number of observations at each level (i.e. 48 observations at density 1 and 48 observations at density 2).

‘Yield’ should be a quantitative variable with a numeric summary (minimum, median, mean, maximum).
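To illustrate what this check looks like, the sketch below builds a simulated stand-in for the crop dataset with the categorical columns stored as factors, then summarizes it. The design (96 rows, three fertilizer types, four blocks) and the yield values are assumptions; with a real file you could set the column types when reading it in.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(
  replicate  = 1:4,
  block      = factor(1:4),
  density    = factor(1:2),
  fertilizer = factor(1:3)
)
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

# Factors are summarized as counts per level, numeric columns as a
# numeric summary (minimum, quartiles, median, mean, maximum)
print(summary(crop.data))
```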

Step 2: Perform the ANOVA test

ANOVA tests whether any of the group means are different from the overall mean of the data by checking the variance of each individual group against the overall variance of the data. If one or more groups falls outside the range of variation predicted by the null hypothesis (all group means are equal), then the test is statistically significant.

We can perform an ANOVA in R using the aov() function. This will calculate the test statistic for ANOVA and determine whether there is significant variation among the groups formed by the levels of the independent variable.

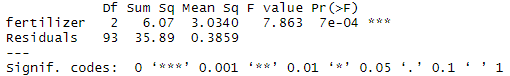

One-way ANOVA

In the one-way ANOVA example, we are modeling crop yield as a function of the type of fertilizer used. First we will use aov() to run the model, then we will use summary() to print the summary of the model.
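A minimal sketch of the one-way model, run on simulated stand-in data (the data and the object name one.way are assumptions):

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

# Model crop yield as a function of fertilizer type, then print the ANOVA table
one.way <- aov(yield ~ fertilizer, data = crop.data)
one.way.summary <- summary(one.way)
print(one.way.summary)
```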

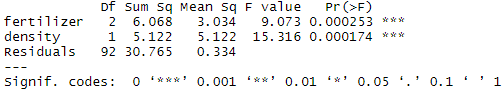

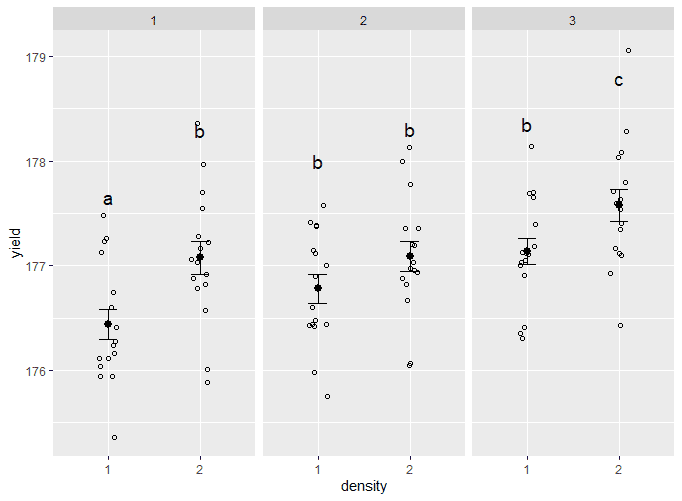

Adding planting density to the model seems to have made the model better: it reduced the residual variance (the residual sum of squares went from 35.89 to 30.765), and both planting density and fertilizer are statistically significant (p-values < 0.001).
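The comparison between the one-way and two-way models can be sketched as below. The data are simulated stand-ins, so the residual sums of squares will not match the figures quoted above; deviance() is used here simply as a convenient way to read off the residual sum of squares.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

one.way <- aov(yield ~ fertilizer, data = crop.data)
two.way <- aov(yield ~ fertilizer + density, data = crop.data)
print(summary(two.way))

# Residual sum of squares before and after adding planting density
rss.one.way <- deviance(one.way)
rss.two.way <- deviance(two.way)
print(c(one.way = rss.one.way, two.way = rss.two.way))
```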

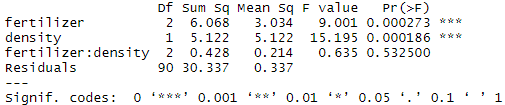

Adding interactions between variables

Sometimes you have reason to think that two of your independent variables have an interaction effect rather than an additive effect.

For example, in our crop yield experiment, it is possible that planting density affects the plants’ ability to take up fertilizer. This might influence the effect of fertilizer type in a way that isn’t accounted for in the two-way model.

To test whether two variables have an interaction effect in ANOVA, simply use an asterisk instead of a plus-sign in the model:
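For instance, with simulated stand-in data (an assumption), the interaction model could be written like this:

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

# fertilizer * density expands to both main effects plus their interaction
interaction.model <- aov(yield ~ fertilizer * density, data = crop.data)
interaction.summary <- summary(interaction.model)
print(interaction.summary)
```

The ANOVA table then has one row for each main effect, one for the fertilizer:density interaction, and one for the residuals.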

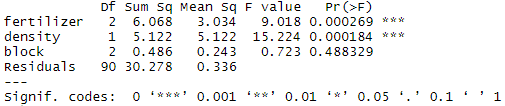

The ‘block’ variable has a low sum-of-squares value (0.486) and a high p value (p = 0.48), so it’s probably not adding much information to the model. It also doesn’t change the sum of squares for the two independent variables, which means that it’s not affecting how much variation in the dependent variable they explain.
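A model with a blocking variable can be sketched by adding block as a further term. The data below are simulated stand-ins with no real block effect built in, so the numbers will differ from those quoted above.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

# Two-way model with the experimental block added as a third term
blocking <- aov(yield ~ fertilizer + density + block, data = crop.data)
blocking.summary <- summary(blocking)
print(blocking.summary)
```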

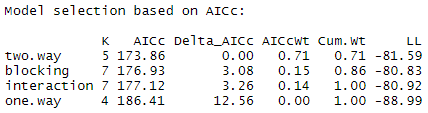

Step 3: Find the best-fit model

There are now four different ANOVA models to explain the data. How do you decide which one to use? Usually you’ll want to use the ‘best-fit’ model – the model that best explains the variation in the dependent variable.

The Akaike information criterion (AIC) is a good test for model fit. AIC calculates the information value of each model by balancing the variation explained against the number of parameters used.

In AIC model selection, we compare the information value of each model and choose the one with the lowest AIC value (a lower number means more information explained!)
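As a rough sketch of this comparison, the code below uses base R's AIC() function on four candidate models fitted to simulated stand-in data. This is a swapped-in approach chosen to avoid extra packages, not necessarily the function used to produce the output in this guide, and the model names are assumptions.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

one.way           <- aov(yield ~ fertilizer, data = crop.data)
two.way           <- aov(yield ~ fertilizer + density, data = crop.data)
interaction.model <- aov(yield ~ fertilizer * density, data = crop.data)
blocking          <- aov(yield ~ fertilizer + density + block, data = crop.data)

# One row per model, with its number of parameters (df) and AIC value
aic.table <- AIC(one.way, two.way, interaction.model, blocking)
aic.table <- aic.table[order(aic.table$AIC), ]  # lowest AIC first
print(aic.table)

best.model <- rownames(aic.table)[1]
```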

The output looks like this:

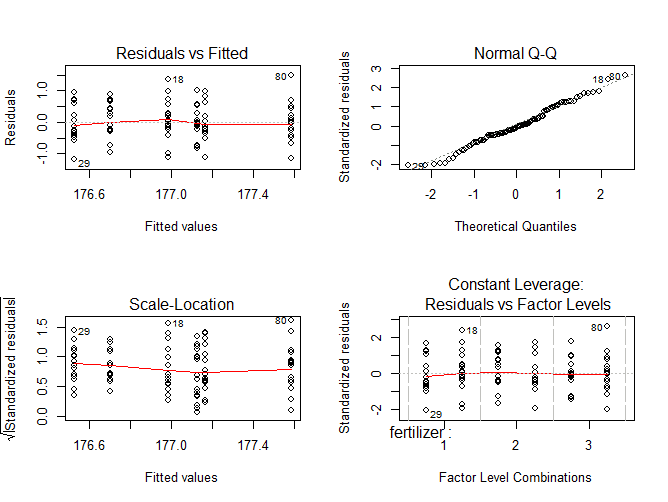

The diagnostic plots show the unexplained variance (residuals) across the range of the observed data.

Each plot gives a specific piece of information about the model fit, but it’s enough to know that the red line representing the mean of the residuals should be horizontal and centered on zero (or on one, in the scale-location plot), meaning that there are no large outliers that would cause research bias in the model.

The normal Q-Q plot plots a regression between the theoretical residuals of a perfectly-homoscedastic model and the actual residuals of your model, so the closer to a slope of 1 this is the better. This Q-Q plot is very close, with only a bit of deviation.

From these diagnostic plots we can say that the model fits the assumption of homoscedasticity.

If your model doesn’t fit the assumption of homoscedasticity, you can try the Kruskal-Wallis test instead.
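In base R this non-parametric alternative is available as kruskal.test(). The sketch below applies it to simulated stand-in data (an assumption); note that it compares groups defined by a single factor, so it stands in for a one-way ANOVA only.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

# Rank-based test for differences in yield among fertilizer types
kw.test <- kruskal.test(yield ~ fertilizer, data = crop.data)
print(kw.test)
```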

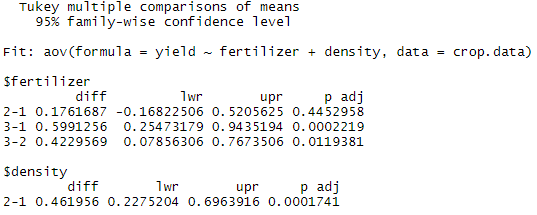

Step 5: Do a post-hoc test

ANOVA tells us if there are differences among group means, but not what the differences are. To find out which groups are statistically different from one another, you can perform a Tukey’s Honestly Significant Difference (Tukey’s HSD) post-hoc test for pairwise comparisons:
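A minimal sketch of the post-hoc test using TukeyHSD() on a two-way model follows. The data are simulated stand-ins and the object names are assumptions.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

two.way <- aov(yield ~ fertilizer + density, data = crop.data)

# Pairwise comparisons for every factor in the model, with adjusted p values
tukey.two.way <- TukeyHSD(two.way)
print(tukey.two.way)
```

Each factor gets its own table with the difference in means (diff), the confidence limits (lwr, upr), and the adjusted p value (p adj) for every pair of levels.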

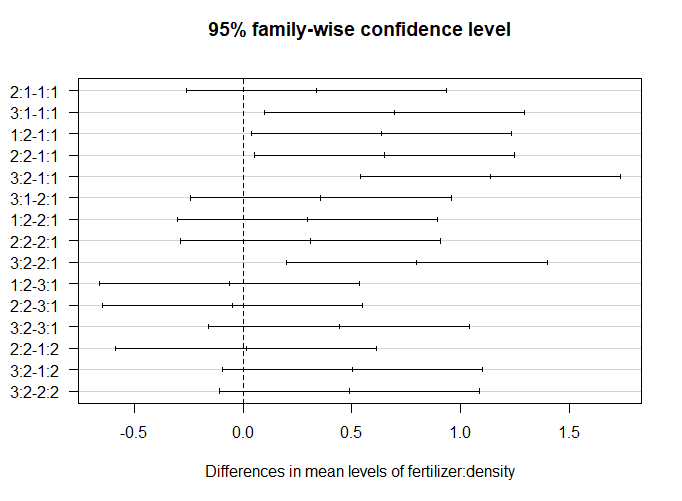

Instead of printing the TukeyHSD results in a table, we’ll do it in a graph.

Next, add the group labels as a new variable in the data frame.

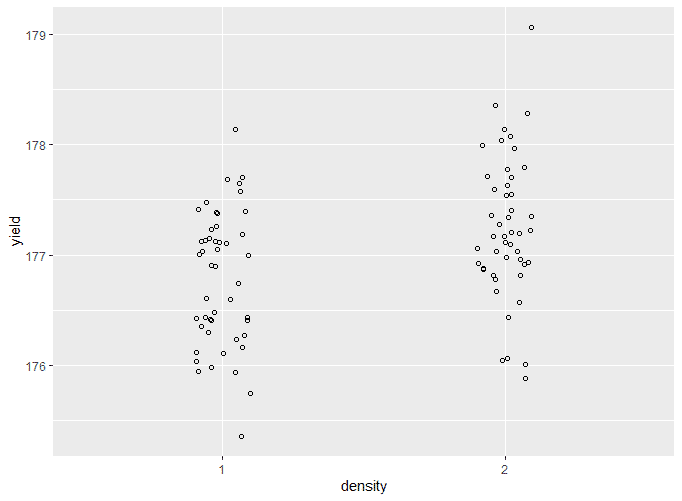

The output looks like this:

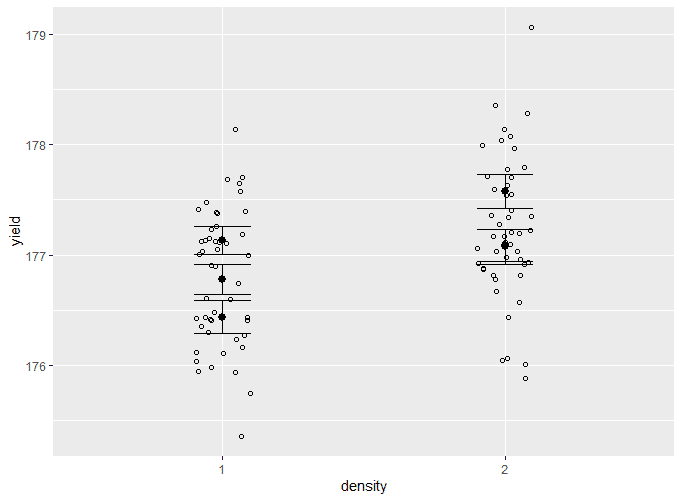

Add the means and standard errors to the graph

The output looks like this:

Make the graph ready for publication

In this step we will remove the grey background and add axis labels.

Frequently asked questions about ANOVA

The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master’s), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

A factorial ANOVA is any ANOVA that uses more than one categorical independent variable. A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.
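To make the calculation concrete, the sketch below computes the F statistic by hand for a one-way design and compares it with the critical value from qf(). The data are simulated stand-ins (an assumption), and alpha is set to 0.05 as in the text.

```r
# Simulated stand-in for the crop dataset (an assumption, not the real data)
set.seed(42)
crop.data <- expand.grid(replicate = 1:4, block = factor(1:4),
                         density = factor(1:2), fertilizer = factor(1:3))
crop.data$yield <- 176.5 + 0.3 * (crop.data$fertilizer == "3") +
  0.4 * (crop.data$density == "2") + rnorm(nrow(crop.data), sd = 0.6)

y <- crop.data$yield
g <- crop.data$fertilizer
n <- length(y)    # total number of observations
k <- nlevels(g)   # number of groups

# Between-group and within-group sums of squares
group.means <- tapply(y, g, mean)
group.sizes <- tapply(y, g, length)
ss.between  <- sum(group.sizes * (group.means - mean(y))^2)
ss.within   <- sum((y - ave(y, g))^2)

# F = mean square between groups / mean square error
f.statistic <- (ss.between / (k - 1)) / (ss.within / (n - k))

# Critical value of F at alpha = 0.05
f.critical <- qf(0.95, df1 = k - 1, df2 = n - k)
significant <- f.statistic > f.critical
print(c(F = f.statistic, critical = f.critical))
```

The hand-computed value should agree with the F value reported by summary() for the same one-way aov() model.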

Quantitative variables are any variables where the data represent amounts (e.g. height, weight, or age).

Categorical variables are any variables where the data represent groups. This includes rankings (e.g. finishing places in a race), classifications (e.g. brands of cereal), and binary outcomes (e.g. coin flips).

You need to know what type of variables you are working with to choose the right statistical test for your data and interpret your results.
